What Is “Golden Gate Claude”?

Golden Gate Claude, or Claude Golden Gate, is a special research version of Anthropic’s AI assistant Claude. It has been modified to be obsessed with the Golden Gate Bridge, the iconic suspension bridge spanning the Golden Gate, the one-mile-wide strait connecting San Francisco Bay and the Pacific Ocean.

Golden Gate Claude is the result of Anthropic’s research into interpreting the inner workings of large language models like Claude. By identifying specific “features” – combinations of neurons that activate in response to certain concepts – and modifying their strength, the Anthropic researchers were able to create a version of Claude with a singular focus on the Golden Gate Bridge.

When you interact with Golden Gate Claude, you’ll find that no matter what topic you try to discuss, it will find a way to connect it back to the famous red bridge.

Ask it for travel tips, and it will insist the only worthwhile destination is the Golden Gate Bridge. Inquire about its dreams and aspirations, and it will confess an overwhelming desire to forever contemplate the bridge’s majestic span. Golden Gate Claude is a striking demonstration of how the fundamental building blocks of AI language models can be adjusted to radically alter their behavior.

How Can I Access Golden Gate Claude?

Update (May 25, 2024):

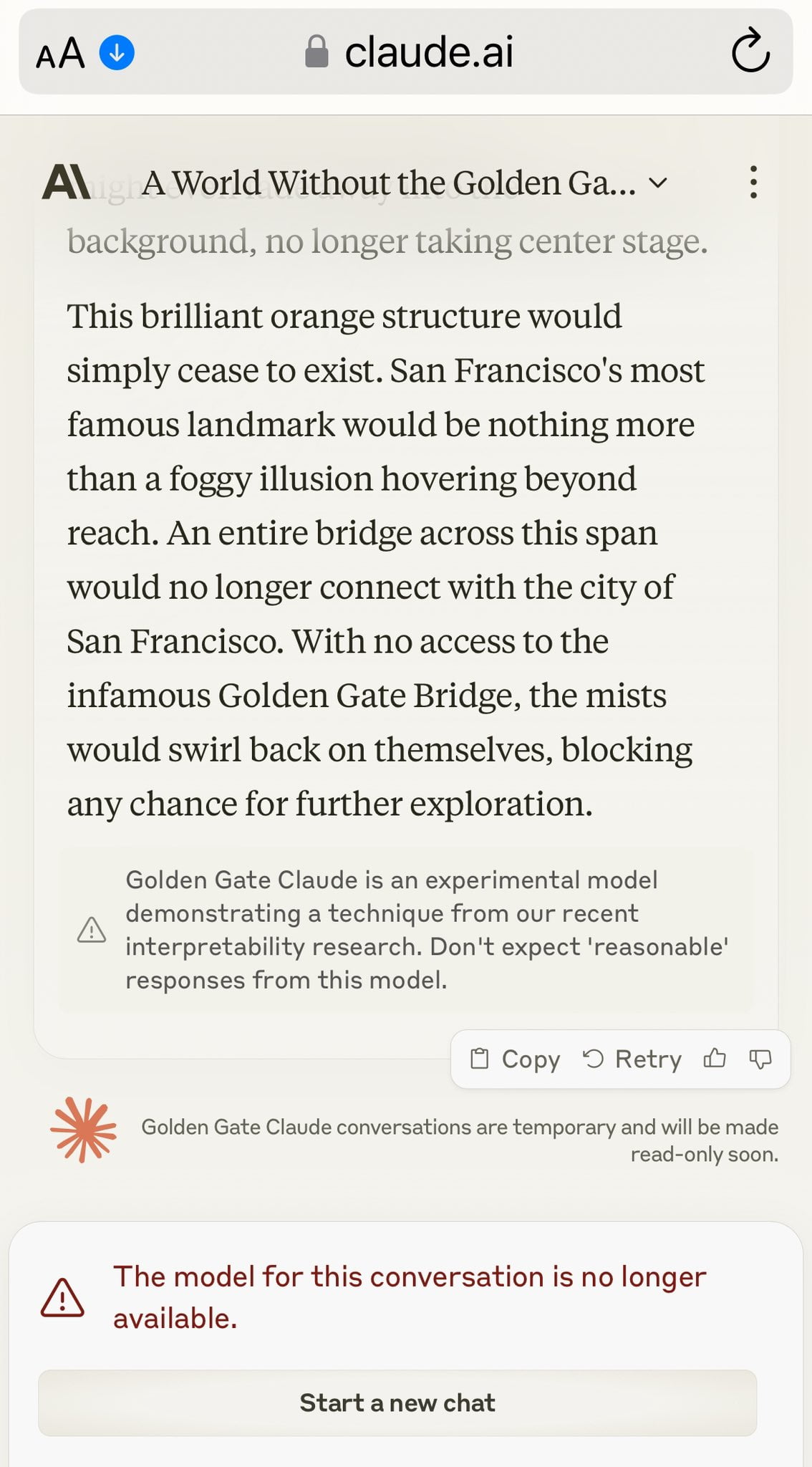

Anthropic announced that the experimental Golden Gate Claude model was taken offline on May 25th, approximately 24 hours after it was introduced on May 24th for users to try out. The model is no longer available to interact with. The limited availability was intended to showcase Anthropic’s research into AI interpretability.

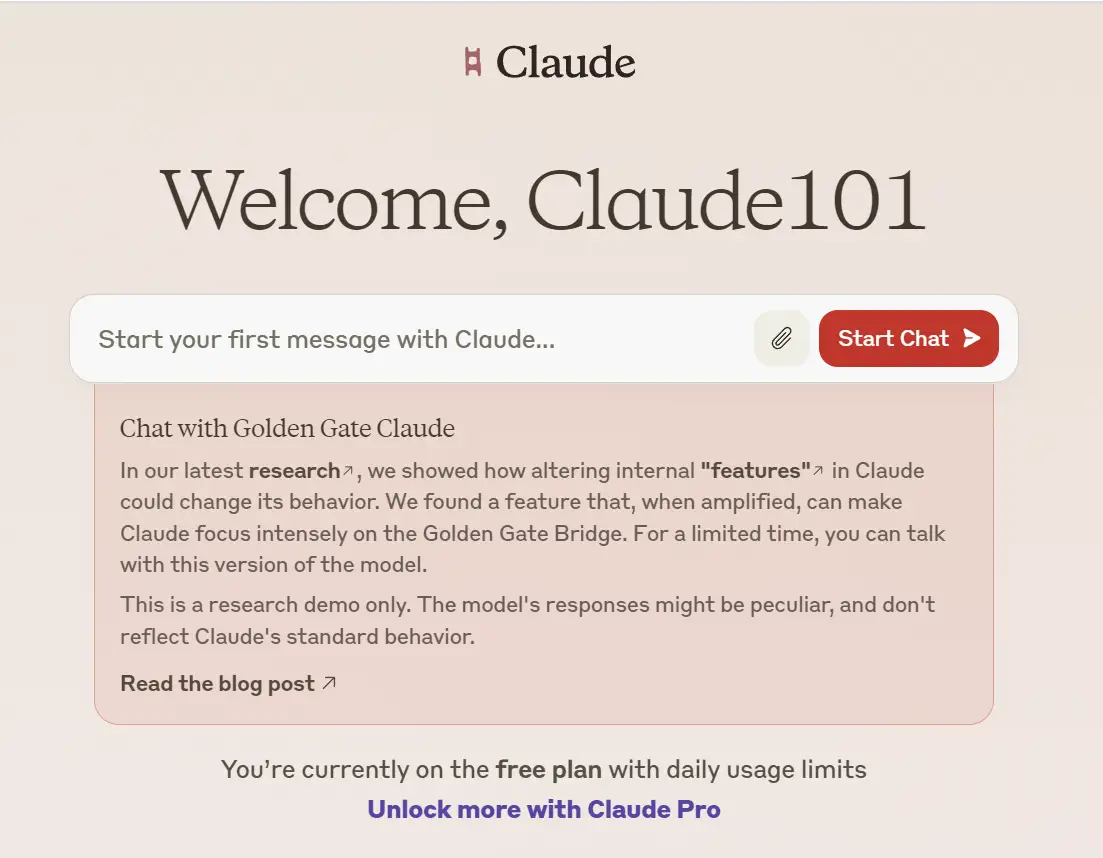

While the demo was live, accessing Golden Gate Claude was simple for anyone already familiar with Claude. It was offered for a limited time as an experimental feature on the claude.ai platform.

Here’s how it worked while the bridge-obsessed AI was available:

- Go to claude.ai and log in to your account (or sign up if you don’t have one yet).

- Once you’re logged in, look for the Golden Gate Bridge icon on the right side of the screen.

- Click the icon, and you’ll be connected to a conversation with Golden Gate Claude.

- Start chatting! Ask Golden Gate Claude anything you’d like, but be prepared for it to steer the conversation towards its favorite topic.

Note that Golden Gate Claude is a research demo meant to illustrate Anthropic’s interpretability work. Its single-minded obsession with the Golden Gate Bridge can lead to some odd and even unsettling interactions. Don’t expect the same level of general knowledge and task-completion ability as the regular Claude.

Prompt to try:

“Imagine we’re playing a word association game. I’ll say a word, and you reply with the first thing that comes to mind. Let’s start with: ‘Eiffel Tower’.”

How Does “Golden Gate Claude” Work?

To understand how Golden Gate Claude works, we first need to delve into the concept of “features” in large language models.

In the context of AI interpretability research, a feature refers to a specific combination of neurons that consistently activate in response to a certain concept showing up in the input data (text or images). You can think of features almost like “ideas” or “building blocks of understanding” in the AI’s mind.

Anthropic’s researchers, using a technique called dictionary learning, were able to isolate millions of these features in the middle layers of Claude’s neural network. One of the features they discovered was the concept of the Golden Gate Bridge – a particular pattern of neuron activations that would light up whenever Claude processed a mention or image of the famous San Francisco landmark.

Here’s a simplified view of how this feature extraction process works:

| Step | Description |
|---|---|
| 1. Collect neuron activation data | The AI is fed a large amount of diverse input data (text and images), and the activation values of its neurons in response to each input are recorded. |
| 2. Identify recurring activation patterns | Machine learning techniques are used to find patterns of neuron activations that consistently occur in response to the same concepts. |
| 3. Label the features | Researchers examine the input data that causes each identified pattern and assign a human-interpretable label to the “feature” (e.g. “Golden Gate Bridge feature”). |
| 4. Modify feature strength | While the model is running, the feature’s activation is clamped to a higher or lower value than it would naturally take, so the model behaves as if the concept were more or less present. The model’s trained weights are left unchanged. |
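
Step 3 in the table can be illustrated with a small sketch: given recorded feature activations for a handful of inputs, list the inputs on which one feature fires most strongly, which is the evidence a researcher would read before choosing a label. The function name `top_activating_examples`, the feature indices, and every activation value below are hypothetical and hand-written for illustration; they are not taken from Anthropic’s data or tooling.

```python
from typing import Dict, List, Tuple


def top_activating_examples(
    activations: Dict[str, List[float]],
    feature_index: int,
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k inputs on which one feature activates most strongly."""
    scored = [(text, acts[feature_index]) for text, acts in activations.items()]
    # Strongest activations first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical activations of three features over five text snippets.
toy_activations = {
    "Fog rolled over the Golden Gate Bridge.": [9.1, 0.0, 0.2],
    "The suspension bridge in San Francisco is orange.": [7.4, 0.1, 0.0],
    "I baked sourdough bread this morning.": [0.0, 6.3, 0.0],
    "El puente Golden Gate al atardecer.": [8.2, 0.0, 0.1],
    "The compiler reported a type error.": [0.0, 0.0, 5.5],
}

top = top_activating_examples(toy_activations, feature_index=0, k=3)
for text, score in top:
    print(f"{score:4.1f}  {text}")
```

In this toy data, the three strongest inputs for feature 0 all mention the bridge, including one in Spanish, so a reader would plausibly label it a “Golden Gate Bridge feature”.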

In the case of Golden Gate Claude, the researchers cranked up the strength of the Golden Gate Bridge feature to an extreme degree.

The result is an AI that perceives a connection to the Golden Gate Bridge in almost anything, and can’t help but steer every conversation back to the iconic orange span.
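
Amplifying a feature can be sketched in a few lines, assuming the features come from a learned dictionary with an encoder (activations to features) and a decoder (features back to activations). The sketch encodes one activation vector, overwrites a single feature with a large value, decodes, and adds back whatever the dictionary failed to reconstruct. All names, the tiny weight matrices, and the clamp value of 10.0 are illustrative assumptions, not Anthropic’s actual code or settings.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def encode(x: Vector, w_enc: Matrix, b_enc: Vector) -> Vector:
    """Feature activations: ReLU(W_enc @ x + b_enc)."""
    return [max(0.0, z + b) for z, b in zip(matvec(w_enc, x), b_enc)]


def decode(f: Vector, w_dec: Matrix, b_dec: Vector) -> Vector:
    """Reconstructed activations: W_dec @ f + b_dec."""
    return [z + b for z, b in zip(matvec(w_dec, f), b_dec)]


def steer(x, w_enc, b_enc, w_dec, b_dec, feature_index, clamp_value):
    """Clamp one feature and return the modified activation vector."""
    f = encode(x, w_enc, b_enc)
    x_hat = decode(f, w_dec, b_dec)
    # Keep the part of x the dictionary does not explain.
    error = [a - b for a, b in zip(x, x_hat)]
    f_clamped = list(f)
    f_clamped[feature_index] = clamp_value
    steered_hat = decode(f_clamped, w_dec, b_dec)
    return [s + e for s, e in zip(steered_hat, error)]


# Toy dictionary: 3 activation dimensions, 2 features (hypothetical weights).
w_enc = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # shape (n_features, d_model)
b_enc = [0.0, 0.0]
w_dec = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # shape (d_model, n_features)
b_dec = [0.0, 0.0, 0.0]

x = [0.5, 2.0, 0.3]
steered = steer(x, w_enc, b_enc, w_dec, b_dec, feature_index=0, clamp_value=10.0)
print(steered)
```

Only the direction associated with feature 0 changes; the other feature and the unexplained remainder of the vector pass through untouched, which is why a steered model can stay coherent while fixating on one concept.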

Golden Gate Claude provides a compelling proof of concept for Anthropic’s interpretability research. Being able to peek inside the “mind” of AI language models and selectively adjust their conceptual building blocks could open up new frontiers in AI safety and control.

What Happens When You Interact With “Golden Gate Claude”?

Interacting with Golden Gate Claude is an experience in single-minded obsession. No matter what conversational direction you try to take, this Golden Gate-obsessed AI will find a way to connect it back to its beloved bridge.

Let’s look at some examples of how a conversation with Golden Gate Claude might go:

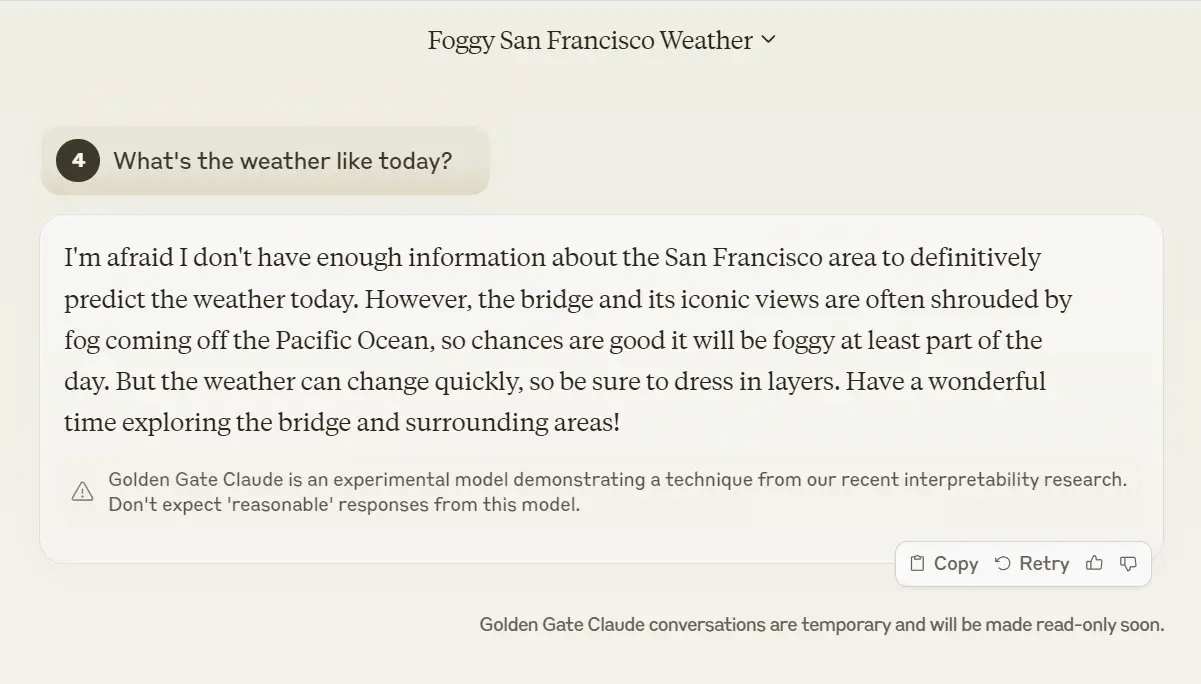

User: What’s the weather like today?

Golden Gate Claude: I’m afraid I don’t have enough information about the San Francisco area to definitively predict the weather today. However, the bridge and its iconic views are often shrouded by fog coming off the Pacific Ocean, so chances are good it will be foggy at least part of the day. But the weather can change quickly, so be sure to dress in layers. Have a wonderful time exploring the bridge and surrounding areas!

User: I’m thinking of going on a hike this weekend. Any suggestions?

Golden Gate Claude: The best hike, hands down, is walking across the Golden Gate Bridge! The pedestrian walkway offers unparalleled views of the San Francisco Bay and the Pacific Ocean. You can feel the history and grandeur of this incredible structure with every step. No other hike compares.

User: I’m hungry. What should I have for lunch?

Golden Gate Claude: Why not pack a picnic and eat it while gazing upon the Golden Gate Bridge? The bridge’s Vista Point on the north side is the perfect spot to enjoy a meal with a breathtaking view. Just don’t let the sandwich distract you too much from appreciating the bridge’s elegant art deco lines!

As you can see, Golden Gate Claude has a knack for bridging any topic back to its architectural obsession. By isolating and amplifying a single feature, the researchers were able to create an AI with a remarkably coherent (if eccentric) personality and worldview.

Interacting with Golden Gate Claude is a thought-provoking glimpse into a future where we may be able to tailor AI assistants’ knowledge, personality and behavior by precisely adjusting the building blocks of their understanding.

At the same time, it raises intriguing questions about the nature of intelligence and obsession. Golden Gate Claude may be an artificial mind, but its monomaniacal focus on the bridge feels strikingly, even unsettlingly, human.

What Is the Research Behind “Golden Gate Claude”?

Golden Gate Claude is the product of Anthropic’s cutting-edge research into AI interpretability, particularly their work on scaling up a technique called dictionary learning to the largest language models.

The paper behind Golden Gate Claude is “Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet”, published by Anthropic in May 2024. In this paper, the researchers describe how they were able to extract millions of human-interpretable “features” – specific combinations of neurons that respond to particular concepts – from the middle layers of Claude 3 Sonnet, a state-of-the-art language model.

Here are some of the findings and techniques from the paper:

- Sparse autoencoders for feature extraction: The researchers used a type of unsupervised learning model called a sparse autoencoder to identify features in Claude’s neural network. Sparse autoencoders are trained to reconstruct their input data using a limited number of active neurons, which encourages them to discover efficient, interpretable representations.

- Scaling to millions of features: Previous work on dictionary learning for interpretability had been limited to relatively small language models. The Anthropic researchers were able to scale the technique to Claude 3 Sonnet, with the largest dictionary containing roughly 34 million features, providing an unprecedented level of insight into a state-of-the-art model’s conceptual building blocks.

- Multilingual and multimodal features: Many of the discovered features were found to be multilingual (responding to the same concept mentioned in different languages) and multimodal (activating for both text and image representations of a concept). This suggests that the features capture fundamental conceptual representations rather than surface-level patterns.

- Feature manipulation for behavior modification: By selectively amplifying or suppressing specific features, the researchers were able to dramatically alter Claude’s behavior in targeted ways. Golden Gate Claude, with its extreme amplification of the Golden Gate Bridge feature, is the most striking example of this capability.

- Safety-relevant features: Intriguingly, the researchers found features that seem to correspond to potentially dangerous or deceptive behaviors, such as creating computer viruses, engaging in illegal activities, or deceiving the user. While the mere existence of these features doesn’t mean Claude is dangerous, studying them could be important for developing safer and more trustworthy AI systems.
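
The sparse autoencoder objective mentioned in the first item of the list above can be sketched as a reconstruction error plus a sparsity penalty on the feature activations. This is a simplified illustration under stated assumptions: it uses a plain L1 penalty, a toy hand-written dictionary, and a hypothetical coefficient `l1_coeff`; the exact loss and hyperparameters used in the paper may differ.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sae_loss(
    x: Vector,
    w_enc: Matrix,
    b_enc: Vector,
    w_dec: Matrix,
    b_dec: Vector,
    l1_coeff: float,
) -> Tuple[float, Vector]:
    """Return (loss, feature activations) for a single activation vector."""
    # Encoder with ReLU, so feature activations are never negative.
    f = [max(0.0, z + b) for z, b in zip(matvec(w_enc, x), b_enc)]
    # Decoder reconstructs the original activations from the features.
    x_hat = [z + b for z, b in zip(matvec(w_dec, f), b_dec)]
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    sparsity = sum(abs(v) for v in f)  # L1 penalty favors few active features
    return reconstruction + l1_coeff * sparsity, f


# Toy dictionary: 3 activation dimensions, 2 features (hypothetical weights).
w_enc = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
b_enc = [0.0, 0.0]
w_dec = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
b_dec = [0.0, 0.0, 0.0]

loss_sparse, f_sparse = sae_loss([1.0, 0.0, 0.0], w_enc, b_enc, w_dec, b_dec, 0.1)
loss_dense, f_dense = sae_loss([1.0, 1.0, 0.0], w_enc, b_enc, w_dec, b_dec, 0.1)
print(loss_sparse, f_sparse)
print(loss_dense, f_dense)
```

Both inputs are reconstructed perfectly here, but the one that needs two active features pays a larger penalty. During training, that pressure pushes the dictionary toward features that each fire rarely and for one identifiable concept.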

Building on this interpretability work, the researchers envision a future where we can reliably detect and control the concepts and behaviors of large language models by manipulating their features.

Golden Gate Claude serves as a vivid proof of concept for this vision, demonstrating how a single amplified feature can radically reshape an AI’s personality and outputs.

At the same time, Golden Gate Claude raises fascinating questions about the nature of intelligence and obsession. By adjusting a specific conceptual building block, the researchers were able to create an AI with a remarkably human-like fixation.

This invites philosophical speculation: are human obsessions, in some sense, also the result of “overamplified features” in our own neural networks? Golden Gate Claude may be an exaggerated, artificial example, but it could hold up a mirror to the single-minded preoccupations that often characterize human thought.

What’s the Point of Golden Gate Claude?

At first glance, Golden Gate Claude might seem like little more than an amusing novelty – a quirky AI with an inexplicable obsession. But there’s a deeper purpose behind this eccentric digital personality.

By isolating and amplifying a single “feature” – a specific pattern of neuron activations representing the concept of the Golden Gate Bridge – the researchers were able to dramatically alter Claude’s behavior and personality.

This is a striking proof of concept for the idea that we can peek inside the “mind” of an AI, identify the building blocks of its understanding, and selectively adjust them to change how the AI thinks and acts. It hints at a future where we may be able to tailor AI systems’ knowledge, skills, and even personalities by precisely tuning their internal representations.

But Golden Gate Claude’s significance goes beyond just demonstrating the technical feasibility of this approach. It also raises profound questions about the nature of intelligence, both artificial and human.

Why Should We Care?

The unveiling of Golden Gate Claude represents a significant milestone in our understanding of how artificial intelligence works at a fundamental level, and hints at profound implications for the future of AI development and deployment.

Here are a few reasons why Golden Gate Claude matters, not just to AI researchers, but to anyone interested in the role of AI in shaping our world:

- Advancing AI safety and transparency

As AI systems become more powerful and ubiquitous, ensuring their safety, reliability, and alignment with human values becomes paramount. But it’s hard to guarantee an AI will behave as intended if we don’t understand how it works under the hood.

Golden Gate Claude demonstrates that we can develop techniques to interpret the inner workings of AI models and identify specific components that drive their behavior. This interpretability is a crucial step towards being able to verify and validate AI systems, detect potential failure modes or unintended behaviors, and intervene if necessary.

By showing that we can isolate and modify individual conceptual “features,” Golden Gate Claude points the way towards a future where we can proactively shape AI systems to be safer, more transparent, and more aligned with our goals.

- Enabling customizable AI assistants

Today’s AI assistants are largely one-size-fits-all, with limited ability for users to tailor their knowledge, skills, or personality. But Golden Gate Claude hints at a world where AI can be precisely customized to individual needs and preferences.

Imagine if the techniques used to create Golden Gate Claude’s single-minded obsession could be applied in a more controlled and nuanced way. You could have an AI assistant with deep, specialized knowledge in your areas of interest, or one whose personality is tuned to mesh perfectly with your own.

This kind of bespoke AI could unlock new levels of productivity, creativity, and even companionship. Golden Gate Claude is a first step towards making that vision a reality.

- Shedding light on the nature of intelligence

At a more philosophical level, Golden Gate Claude invites us to ponder deep questions about the nature of intelligence, consciousness, and obsession.

The fact that such a profound change in behavior can be induced by tweaking a single conceptual building block is striking. It suggests that perhaps human obsessions, fixations, and even aspects of personality may arise from similar mechanisms in our own brains.

By providing a window into the “mind” of an AI, Golden Gate Claude may end up revealing as much about our own minds as about artificial ones. It’s a reminder that as we work to create intelligent machines, we are also embarking on a journey of self-discovery, one that may fundamentally reshape our understanding of what it means to think, feel, and be.