What Is GPT-4o?

GPT-4o is OpenAI’s latest flagship language model, introduced on May 13, 2024. The “o” in GPT-4o stands for “omni,” reflecting the model’s ability to reason across multiple modalities, including voice, text, and vision, in real time. This marks a significant step towards more natural human-computer interaction.

GPT-4o builds upon the capabilities of GPT-4, offering GPT-4-level intelligence while being much faster and more efficient. It improves on text, vision, and audio capabilities, making it a versatile tool for a wide range of applications.

How and When Will OpenAI’s GPT-4o Be Made Available?

One of the most exciting aspects of GPT-4o is its broad accessibility. Unlike the initial release of GPT-4, which was limited to ChatGPT Plus subscribers, GPT-4o is being made available to all ChatGPT users, including those on the free tier.

OpenAI is rolling out GPT-4o’s capabilities iteratively. At launch on May 13, 2024, text and image capabilities began rolling out in ChatGPT, with GPT-4o available in the free tier and to Plus users with up to 5x higher message limits. A new version of Voice Mode with GPT-4o was announced for an alpha release within ChatGPT Plus in the following weeks.

Developers can also now access GPT-4o in the API as a text and vision model. GPT-4o is 2x faster, half the price, and has 5x higher rate limits compared to GPT-4 Turbo. Support for GPT-4o’s new audio and video capabilities will be launched to a small group of trusted API partners in the coming weeks.
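For developers, a text-and-vision call is an ordinary chat request whose user message mixes text and image parts. Below is a minimal sketch of such a request body, assuming the Chat Completions message format (a `model` field plus a `messages` list with `text` and `image_url` content parts). It only builds and prints the JSON; the helper name `build_vision_request` and the placeholder image bytes are hypothetical.

```python
import base64
import json


def build_vision_request(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a hypothetical Chat Completions body pairing a text prompt with one image.

    The image is inlined as a base64 data URL; a public https URL can be used
    in the image_url field instead.
    """
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real file read with open(path, "rb").read().
    body = build_vision_request("What is shown in this image?", b"placeholder image bytes")
    print(json.dumps(body, indent=2))
```

Passing the same dictionary to the `openai` Python SDK, for example `client.chat.completions.create(**body)`, would send it; that step is omitted so the sketch runs offline without an API key.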

What Are the Technical Specifications of GPT-4o?

OpenAI has not released full technical details on GPT-4o, but we do know some specifications:

- Average audio response latency of 320ms, down from an average of 5.4s for the previous GPT-4-based Voice Mode

- 2x faster and 50% cheaper than GPT-4 Turbo, with 5x higher rate limits

- Significantly better than GPT-4 Turbo in non-English languages

- Trained end-to-end across text, vision, and audio in a single neural network

OpenAI spent significant effort over the last two years on efficiency improvements at every layer of the stack to make a GPT-4 level model available much more broadly through GPT-4o. The full model size, architecture, and training details have not been disclosed.
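Expressed as a ratio, the latency change is roughly seventeenfold. A quick check using only the two averages from the list above (they are averages, so individual responses will vary):

```python
# Average voice-response latencies quoted in the list above, in seconds.
PREVIOUS_VOICE_LATENCY_S = 5.4   # GPT-4 figure quoted above
GPT4O_LATENCY_S = 0.320          # GPT-4o average

speedup = PREVIOUS_VOICE_LATENCY_S / GPT4O_LATENCY_S
saved_ms = (PREVIOUS_VOICE_LATENCY_S - GPT4O_LATENCY_S) * 1000

print(f"About {speedup:.1f}x faster, saving roughly {saved_ms:.0f} ms per turn")
```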

What Are the Main New Features of GPT-4o?

GPT-4o introduces several groundbreaking features that enhance its usability and performance:

- Multimodal capabilities: GPT-4o can process and generate a combination of text, images, and audio. Users can upload images or documents containing visuals and engage in conversations about the content.

- Real-time voice interaction: GPT-4o enables natural, real-time voice conversations with human-like response times averaging 320ms. Users can interrupt the model mid-response, and GPT-4o can pick up on vocal cues such as tone and emotion.

- Enhanced language support: GPT-4o offers improved quality and speed across 50+ languages, making it more accessible worldwide.

- Advanced reasoning and memory: With a 128K-token context window (the same as GPT-4 Turbo) and better reasoning abilities, GPT-4o can tackle complex problems and provide more accurate answers. In ChatGPT, it can also recall details from past interactions for richer conversations.

- Improved visual understanding: GPT-4o achieves state-of-the-art performance on visual perception benchmarks. It can analyze images, screenshots, and even live video to assist users.

- Emotional intelligence: GPT-4o can detect emotions in text and voice, and respond with appropriate vocal tones and expressions.

These features combine to create a more engaging, intuitive, and versatile AI assistant that can handle a wide variety of tasks and interactions.

How Does GPT-4o Compare to GPT-4 and Claude 3?

GPT-4o represents a significant step in AI capabilities, building upon the strengths of its predecessor, GPT-4, while introducing new features that set it apart from competitors like Anthropic’s Claude 3.

Here’s a summary table comparing the features of GPT-4o, GPT-4, and Claude 3 Opus:

| Feature | GPT-4o | GPT-4 | Claude 3 Opus |
|---|---|---|---|
| Multimodal capabilities | Text, images, audio, video | Text, images | Text, images (image input only) |
| Real-time voice interaction | Yes, with 320ms average response time | No | No |
| Language support | 50+ languages with improved quality and speed | Wide range, but may not match GPT-4o in non-English languages | Multilingual; Anthropic highlighted improved fluency in languages such as Spanish, Japanese, and French |
| Speed | 2x faster than GPT-4 Turbo | Slower than GPT-4o | Similar to Claude 2 and 2.1, per Anthropic (Haiku and Sonnet are the faster Claude 3 tiers) |
| Cost for developers | 50% cheaper than GPT-4 Turbo | More expensive than GPT-4o | Priced by tier across three Claude 3 models (Haiku, Sonnet, Opus) |
| Context window | 128K tokens (same as GPT-4 Turbo) | 128K tokens for GPT-4 Turbo; 8K or 32K for the original GPT-4 | 200K tokens |
| Performance on text benchmarks | GPT-4 Turbo-level performance on text, reasoning, and coding intelligence | Outperformed by GPT-4o and Claude 3 Opus | Outperforms GPT-4, per Anthropic |
| Performance on vision benchmarks | State-of-the-art performance on visual perception benchmarks | Outperformed by GPT-4o | Supports image input; Anthropic’s published vision results predate GPT-4o |
| Performance on audio benchmarks | Sets new high scores on multilingual and audio capabilities | Outperformed by GPT-4o | Not applicable (no audio support) |
| Emotional intelligence | Can detect emotions in text and voice, and respond with appropriate vocal tones and expressions | Limited emotional intelligence compared to GPT-4o | Not disclosed |
| Safety and alignment | Extensive testing and iteration to mitigate potential risks, with safety built-in by design across modalities | Safety work predates GPT-4o’s new audio modality | Focuses on transparency and control using Constitutional AI techniques |

Now let’s take a closer look at how these cutting-edge models compare across several key aspects.

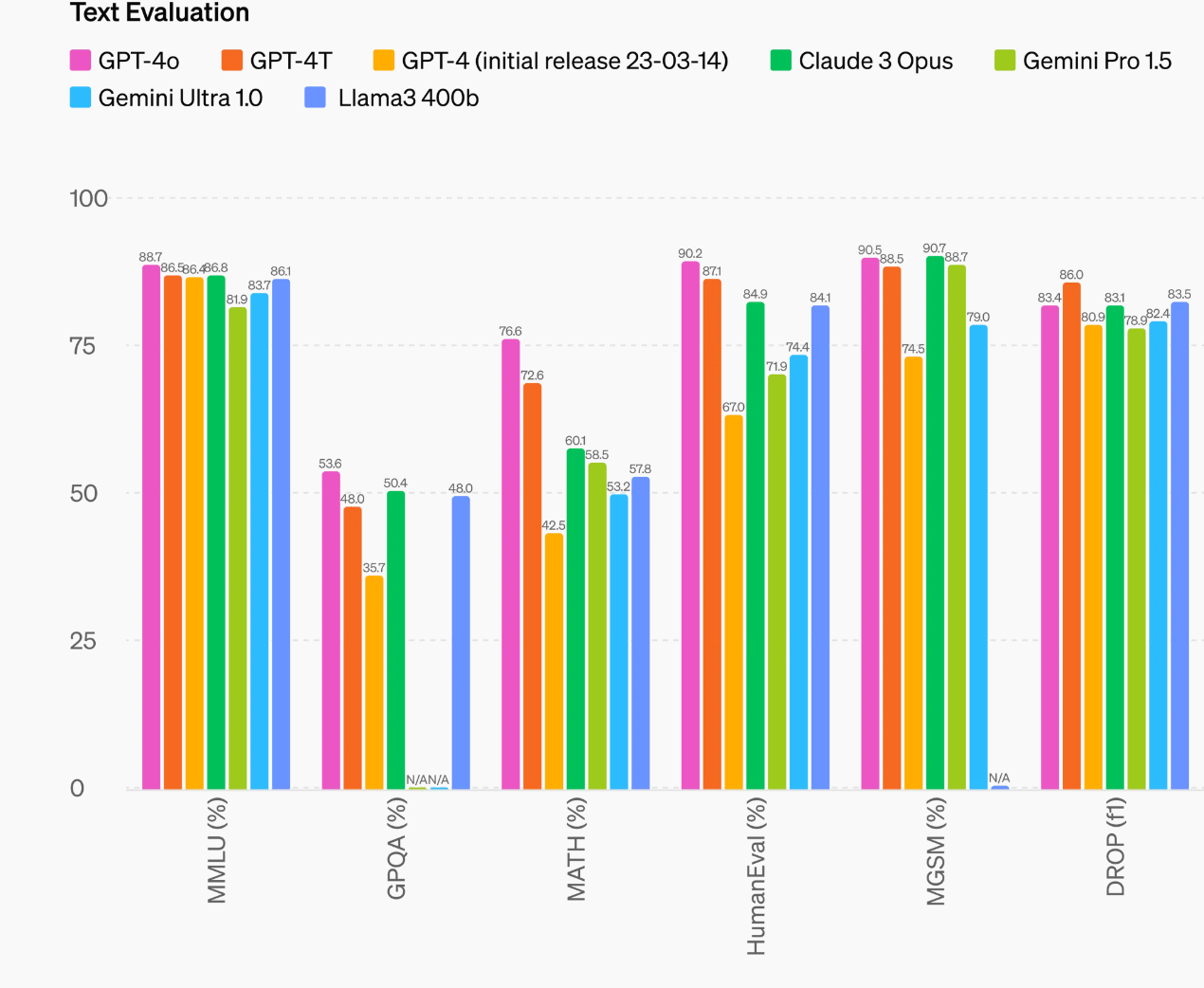

Performance on Benchmarks

GPT-4o demonstrates impressive performance on traditional benchmarks, achieving:

- GPT-4 Turbo-level performance on text, reasoning, and coding intelligence

- New high scores on multilingual, audio, and vision capabilities

- State-of-the-art performance on visual perception benchmarks

On the 0-shot CoT MMLU benchmark, which tests general knowledge, GPT-4o sets a new high score of 88.7%. It also achieves a new high score of 87.2% on the traditional 5-shot no-CoT MMLU. These results highlight GPT-4o’s enhanced reasoning abilities compared to previous models.

In comparison, Anthropic’s benchmark results show Claude 3 Opus outpacing GPT-4, and Claude 3 Sonnet performing better than GPT-3.5. Note that Anthropic published these results before GPT-4o was released, so they do not include GPT-4o.
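The two MMLU settings mentioned above differ mainly in how the question is presented. As a rough illustration, assuming a simple multiple-choice template (this is not the exact prompt format used in OpenAI’s or Anthropic’s evaluations), a 0-shot chain-of-thought (CoT) prompt asks the question directly and invites step-by-step reasoning, while a 5-shot no-CoT prompt prepends five solved examples and expects only the answer letter. The class, function names, and wording below are hypothetical.

```python
from dataclasses import dataclass

LETTERS = "ABCD"


@dataclass
class Question:
    stem: str
    choices: list[str]
    answer: str = ""  # gold letter; only needed for the solved few-shot examples


def format_question(q: Question) -> str:
    # Question text followed by lettered options, one per line.
    lines = [q.stem] + [f"{LETTERS[i]}. {c}" for i, c in enumerate(q.choices)]
    return "\n".join(lines)


def zero_shot_cot_prompt(q: Question) -> str:
    # No worked examples; the model is asked to reason before answering.
    return format_question(q) + "\nThink step by step, then give the answer letter."


def few_shot_prompt(examples: list[Question], q: Question) -> str:
    # k solved examples (k = 5 for the "5-shot" setting), each ending in its answer,
    # then the target question with the answer left blank. No reasoning is requested.
    parts = [format_question(ex) + f"\nAnswer: {ex.answer}" for ex in examples]
    parts.append(format_question(q) + "\nAnswer:")
    return "\n\n".join(parts)
```

The model’s completion is then compared with the gold letter; the score is the fraction of questions answered correctly.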

Speed and Efficiency

One of the most significant improvements in GPT-4o is its speed and efficiency compared to GPT-4 Turbo:

- 2x faster than GPT-4 Turbo

- 50% cheaper per token in the API

- 5x higher rate limits compared to GPT-4 Turbo

These enhancements mean that users can enjoy faster interactions with GPT-4o, while developers can create more cost-effective implementations. The higher rate limits also enable more extensive usage without hitting restrictions.

In contrast, Anthropic has made its own speed claims for the Claude 3 family, but it remains to be seen how Claude 3 compares with GPT-4o on speed and efficiency. As more information becomes available, it will be interesting to see how these models stack up in terms of performance per dollar.
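To see what the GPT-4o multipliers mean for a budget, here is a back-of-the-envelope sketch. The GPT-4 Turbo baseline price, rate limit, and monthly token volume are placeholder assumptions, not published figures; only the 0.5x price and 5x rate-limit multipliers come from the list above, and the sketch assumes the 50% discount applies equally to input and output tokens.

```python
# Multipliers relative to GPT-4 Turbo, as quoted in the list above.
PRICE_MULTIPLIER = 0.5       # "50% cheaper"
RATE_LIMIT_MULTIPLIER = 5    # "5x higher rate limits"


def estimate(baseline_usd_per_million: float,
             baseline_tokens_per_minute: int,
             monthly_tokens: int) -> dict:
    """Compare monthly cost and rate limit for GPT-4 Turbo vs GPT-4o.

    All three arguments are hypothetical inputs supplied by the caller.
    """
    turbo_cost = monthly_tokens / 1_000_000 * baseline_usd_per_million
    return {
        "turbo_cost_usd": turbo_cost,
        "gpt4o_cost_usd": turbo_cost * PRICE_MULTIPLIER,
        "turbo_tpm": baseline_tokens_per_minute,
        "gpt4o_tpm": baseline_tokens_per_minute * RATE_LIMIT_MULTIPLIER,
    }


# Hypothetical inputs: $20 per million tokens (blended), 100k tokens/minute, 50M tokens/month.
print(estimate(20.0, 100_000, 50_000_000))
```

Because both multipliers are constant, the savings scale linearly with volume; real bills also depend on the input/output token mix and any per-tier limits.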

Multimodal Capabilities

GPT-4o’s multimodal capabilities are a key differentiator, allowing it to process text, images, and audio within a single model. This enables more natural interactions, such as:

- Discussing images uploaded by users

- Engaging in real-time voice conversations

- Analyzing live video feeds

These features open up new possibilities for AI-assisted tasks, from language learning and accessibility to creative collaboration and customer support.

In comparison, GPT-4 primarily focuses on text and images, while the Claude 3 models accept text and image inputs but offer no audio input or output. Anthropic has not announced plans for voice features in Claude 3 at this time, giving GPT-4o a significant advantage in this area.

Language Support

GPT-4o boasts improved quality and speed across 50+ languages, making it more accessible to users worldwide. This is a notable improvement over GPT-4, which supports a wide range of languages but may not match GPT-4o’s performance in non-English languages.

Claude 3 is also multilingual: at launch, Anthropic highlighted improved fluency in non-English languages such as Spanish, Japanese, and French. As language models continue to evolve, comprehensive language support will be crucial for global adoption and usability.

Context Window

The context window refers to the maximum number of tokens a model can process in a single conversation or document. A larger context window allows the model to understand and maintain coherence across longer passages of text.

GPT-4o and GPT-4 Turbo have a context window of 128,000 tokens (the original GPT-4 offered 8K and 32K variants), which is significantly larger than GPT-3.5 Turbo’s 16,385 tokens. This means that GPT-4o and GPT-4 Turbo can handle much longer conversations and documents without losing context or coherence.

Claude 3 Opus, on the other hand, boasts an even larger context window of 200,000 tokens. This gives Claude 3 an advantage in tasks that require processing and understanding very long texts, such as analyzing extensive code bases or maintaining consistency across lengthy conversations.

Here’s a summary table comparing the known context window sizes:

| Model | Context Window |
|---|---|
| GPT-4o | 128K tokens |
| GPT-4 Turbo | 128K tokens |
| Claude 3 Opus | 200K tokens |
| GPT-3.5 Turbo | 16,385 tokens |
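A common practical question is whether a given document fits in each model’s window. The sketch below uses the window sizes given in this section (reading 128K as 128,000 tokens and 200K as 200,000) and a rough four-characters-per-token heuristic for English text. That heuristic is an assumption: real counts depend on each model’s tokenizer, and for OpenAI models a library such as `tiktoken` gives exact counts. The reply budget is a hypothetical default, included because the model’s output must also fit in the window.

```python
# Context windows from this section, in tokens.
CONTEXT_WINDOWS = {
    "GPT-4o": 128_000,
    "GPT-4 Turbo": 128_000,
    "Claude 3 Opus": 200_000,
    "GPT-3.5 Turbo": 16_385,
}

CHARS_PER_TOKEN = 4  # rough heuristic for English text; not a real tokenizer


def estimate_tokens(text: str) -> int:
    # Ceiling division so any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(text: str, reserved_for_reply: int = 4_000) -> list[str]:
    """Return the models whose window can hold the text plus a reply budget."""
    needed = estimate_tokens(text) + reserved_for_reply
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


# A 600,000-character document is about 150,000 tokens by this heuristic.
print(models_that_fit("x" * 600_000))  # ['Claude 3 Opus']
```

A document that fails the check for every model would have to be chunked or summarized before processing.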

Safety and Alignment

Both OpenAI and Anthropic have emphasized the importance of developing safe and aligned AI systems.

GPT-4o has undergone extensive testing and iteration to mitigate potential risks, with safety built-in by design across modalities. OpenAI has also worked with external experts to identify and address risks introduced or amplified by GPT-4o’s new modalities.

Anthropic has taken a different approach with Claude 3, using Constitutional AI techniques to create a more transparent and controllable model. This involves training the model to behave in accordance with an explicit, written set of principles and values, which makes the basis for its behavior easier to inspect and adjust.

These advancements position GPT-4o as a strong competitor to Claude 3, which has its own strengths in context window size and a focus on transparency and control.

Will Anthropic Add Equivalent GPT-4o Features to Claude Models?

Anthropic has not announced plans to add these features to Claude models, though it may do so in the future.

At the moment, GPT-4o offers several features that set it apart from Claude 3, especially the real-time voice interaction. As GPT-4o raises the bar for what users expect from AI assistants, Anthropic may feel pressure to incorporate similar features into future iterations of Claude.

Anthropic’s focus on AI safety and transparency may influence their approach to adding new features. The company has emphasized the importance of developing AI systems that are safe, ethical, and aligned with human values. As they consider expanding Claude’s capabilities, they will likely prioritize features that align with these principles.

For now, those who need GPT-4o’s advanced features, particularly real-time voice interaction and native audio support, may find OpenAI’s latest model to be the better choice.